Cross-Account CI/CD Pipeline for ECS and Lambda

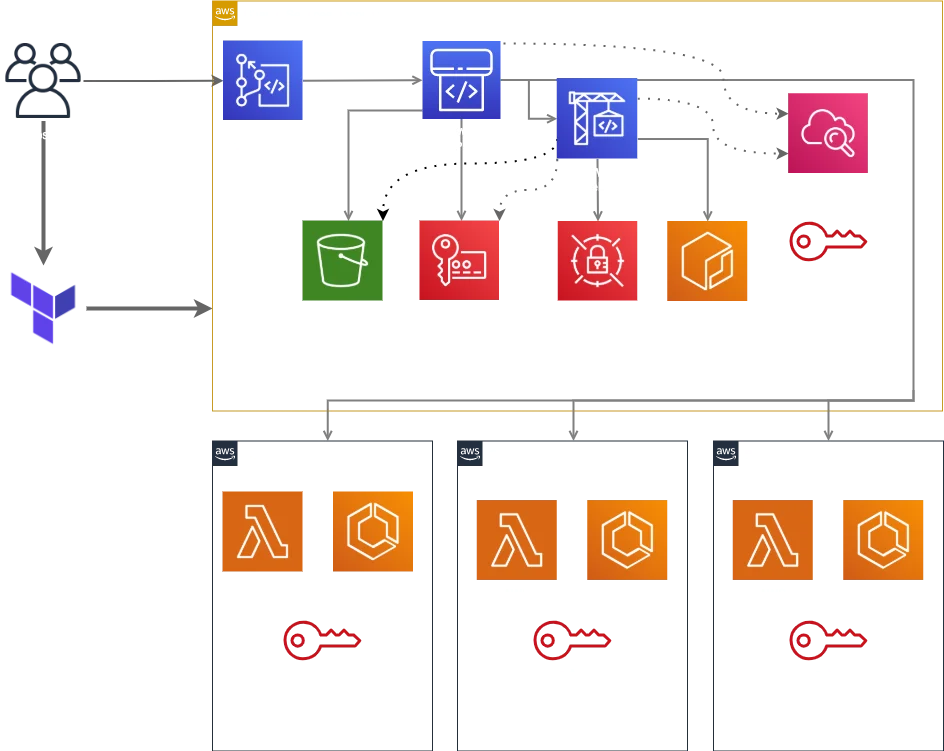

In this blog post, we describe how to set up a cross-account continuous integration and continuous delivery (CI/CD) pipeline on AWS. A CI/CD pipeline helps you automate the steps in your software delivery process, such as triggering automatic builds, storing artifacts, running integration tests, and then deploying to an Amazon ECS service, an AWS Lambda function, or other AWS services.

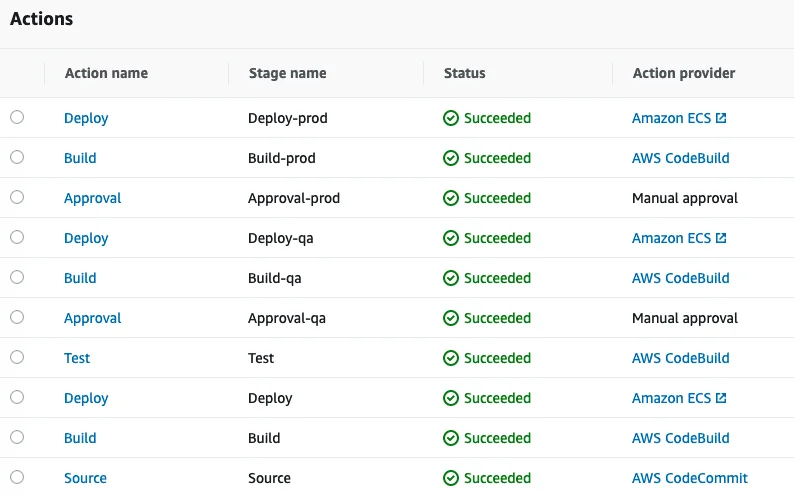

We use AWS CodePipeline, a service that builds, tests, and deploys your code every time there is a code change, together with AWS CodeBuild for specific integration tests and for deploying to AWS services that CodePipeline does not yet support as deployment targets.

We use CodePipeline to orchestrate each step in the release process, such as getting the source code from GitHub or CodeCommit, running integration tests, deploying to the DEV environment, and requiring manual approval before deploying to higher environments such as QA and PROD.
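The approval gate mentioned above could look roughly like this, assuming the pipeline is defined with the Terraform `aws_codepipeline` resource used later in this post. It is a sketch only: the stage and action names and `var.approval_sns_topic_arn` are hypothetical.

```hcl
# Sketch: manual approval stage placed before the Deploy-qa stage, inside the
# aws_codepipeline resource. Stage/action names and the variable are hypothetical.
stage {
  name = "Approve-qa"

  action {
    name     = "Approve"
    category = "Approval"
    owner    = "AWS"
    provider = "Manual"
    version  = "1"

    configuration = {
      # Optional: notify reviewers through an SNS topic (hypothetical variable)
      NotificationArn = var.approval_sns_topic_arn
      CustomData      = "Approve promotion of this revision to QA"
    }
  }
}
```

The pipeline pauses at this stage until someone approves or rejects it in the console or through the API; a similar stage in front of the PROD deployment gives production the same gate.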

Many organizations create multiple AWS accounts because accounts provide the highest level of resource and security isolation. This introduces a challenge: AWS services in one account need explicit cross-account access to resources in another. To manage this, we use the following accounts:

- Shared services account: this account contains S3 buckets, CodeCommit repositories, CodeBuild projects, CodePipeline pipelines, CloudTrail, AWS Config, Transit Gateway, and other services shared between accounts. It also holds the permissions that let its pipeline roles assume cross-account roles in the Dev, QA, and Prod accounts, so that CodePipeline and CodeBuild can reach the services and resources the pipeline needs to succeed.

- Dev account: this account contains a sandbox for developers and the development environment for the software. The shared services account has scoped access to specific resources in this account, such as ECS services and Lambda functions.

- QA account: this account has the test environment for making sure that software works as intended.

- Prod account: this account has the production environment.
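To keep this account layout in one place, one option (a sketch, assuming the whole setup is managed with Terraform) is a local map of environment names to account IDs, from which the principal lists for the resource-based policies can be derived. The account IDs are placeholders and the local names are hypothetical.

```hcl
# Hypothetical names; replace the placeholder account IDs with your own.
locals {
  target_accounts = {
    dev  = "111111111111"
    qa   = "222222222222"
    prod = "333333333333"
  }

  # One root principal ARN per target account, e.g. "arn:aws:iam::222222222222:root"
  target_account_roots = [
    for account_id in values(local.target_accounts) : "arn:aws:iam::${account_id}:root"
  ]
}
```

A list like `local.target_account_roots` could then be passed wherever the ECR, KMS, and S3 policies later in this post expect their policy identifiers.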

Note that we avoid hard-coded secret values and use AWS Secrets Manager wherever possible to retrieve passwords, API keys, and other sensitive data.
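For an ECS service, one way to apply this is to reference a Secrets Manager secret from the container definition's `secrets` list, so ECS injects the value as an environment variable when the task starts and only the secret's ARN appears in the task definition. The sketch below uses hypothetical names (`app/db-password`, `var.image_uri`, and an execution role `aws_iam_role.task_execution` assumed to be defined elsewhere). The execution role needs `secretsmanager:GetSecretValue` on the secret, plus `kms:Decrypt` if the secret is encrypted with a customer managed key.

```hcl
# Hypothetical names throughout; aws_iam_role.task_execution is assumed to exist.
variable "image_uri" {
  type = string
}

resource "aws_secretsmanager_secret" "db_password" {
  name = "app/db-password"
}

resource "aws_ecs_task_definition" "app" {
  family             = "app"
  execution_role_arn = aws_iam_role.task_execution.arn

  container_definitions = jsonencode([
    {
      name      = "app"
      image     = var.image_uri
      essential = true
      memory    = 512
      # ECS resolves the secret at task start and exposes it as DB_PASSWORD
      secrets = [
        {
          name      = "DB_PASSWORD"
          valueFrom = aws_secretsmanager_secret.db_password.arn
        }
      ]
    }
  ])
}

# Allow the execution role to read this one secret
data "aws_iam_policy_document" "read_secret" {
  statement {
    actions   = ["secretsmanager:GetSecretValue"]
    resources = [aws_secretsmanager_secret.db_password.arn]
  }
}

resource "aws_iam_role_policy" "read_secret" {
  role   = aws_iam_role.task_execution.id
  policy = data.aws_iam_policy_document.read_secret.json
}
```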

ECS CI/CD pipeline

Before CodePipeline can update your ECS service in a target account, you need to set up a few permissions first, including resource-based policies and cross-account roles. Once these are in place, CodePipeline can update ECS services in its own account or in target accounts. You can use CodeDeploy for more advanced deployment types, such as blue/green deployments, but here we use the simpler standard ECS deploy action in CodePipeline.

Prerequisites

When using CodePipeline or other AWS services across accounts, permissions must work in both directions: the pipeline needs access to services in the target accounts, and the target accounts need access to AWS resources in the shared services (automation) account. We grant this access with cross-account roles and resource-based policies.

Resource-based policies

CodePipeline stores artifacts in an S3 bucket and encrypts them with a KMS key. By default it uses the AWS managed key for Amazon S3, whose key policy cannot be changed, so other accounts cannot use that key to decrypt the artifacts. To let other accounts and their services read the artifact bucket, we first create a customer managed key (CMK) and give it a key policy (a resource-based policy) that allows external principals, meaning roles or the root principal of each target account, which in our case are the dev, qa, and prod accounts. In addition, we attach an S3 bucket policy, also a resource-based policy, that grants the same external principals access to the bucket. Together, these make sure the dev, qa, and prod accounts can read the artifact bucket and use the CMK to decrypt its contents.
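A minimal sketch of wiring the CMK to both the artifact bucket and the pipeline, assuming AWS provider v4 or later (where bucket encryption is a separate resource) and the hypothetical names `aws_s3_bucket.artifacts`, `aws_iam_role.codepipeline`, and `app-pipeline`. `aws_kms_key.this` refers to the key whose policy is shown in the KMS policy section.

```hcl
# Default-encrypt the artifact bucket with the customer managed key
resource "aws_s3_bucket_server_side_encryption_configuration" "artifacts" {
  bucket = aws_s3_bucket.artifacts.id # hypothetical bucket resource

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm     = "aws:kms"
      kms_master_key_id = aws_kms_key.this.arn
    }
  }
}

resource "aws_codepipeline" "this" {
  name     = "app-pipeline"                # hypothetical
  role_arn = aws_iam_role.codepipeline.arn # hypothetical service role

  # Artifacts written by the pipeline are encrypted with the CMK, so principals
  # allowed by its key policy (dev, qa, prod) can decrypt them
  artifact_store {
    location = aws_s3_bucket.artifacts.bucket
    type     = "S3"

    encryption_key {
      id   = aws_kms_key.this.arn
      type = "KMS"
    }
  }

  # stage { ... } blocks go here (a pipeline needs at least two stages)
}
```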

We follow the same resource-based policy approach for ECR: we update the repository policy to allow ECS services in the dev, qa, and prod accounts to pull the image and use it in their container definitions.
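For cross-account pulls, the repository policy alone is not enough: the identity pulling the image in the dev, qa, or prod account also needs ECR permissions of its own, and for ECS that identity is the task execution role. A sketch for each target account, assuming the AWS managed `AmazonECSTaskExecutionRolePolicy` fits your needs and using the hypothetical role name `ecs-task-execution`:

```hcl
# In each target account (dev, qa, prod)
data "aws_iam_policy_document" "ecs_tasks_assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["ecs-tasks.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "task_execution" {
  name               = "ecs-task-execution" # hypothetical name
  assume_role_policy = data.aws_iam_policy_document.ecs_tasks_assume.json
}

# Managed policy granting ecr:GetAuthorizationToken, ecr:BatchGetImage,
# ecr:GetDownloadUrlForLayer, ecr:BatchCheckLayerAvailability and CloudWatch Logs writes
resource "aws_iam_role_policy_attachment" "task_execution" {
  role       = aws_iam_role.task_execution.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
}
```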

ECR policy

In the shared services account, update the ECR repository policy to allow access from the dev, qa, and prod accounts.

```hcl
variable "ecr_policy_identifiers" {
  description = "The policy identifiers for the ECR repository policy."
  type        = list(string)
  default     = []
}

# Repository policy: lets the listed principals (for example the dev, qa and
# prod account roots) access this repository in the shared services account
data "aws_iam_policy_document" "ecr" {
  statement {
    sid    = "ecr"
    effect = "Allow"

    actions = [
      "ecr:*"
    ]

    principals {
      type        = "AWS"
      identifiers = var.ecr_policy_identifiers
    }
  }
}

resource "aws_ecr_repository_policy" "this" {
  repository = aws_ecr_repository.this.name
  policy     = data.aws_iam_policy_document.ecr.json
}
```

KMS policy

In the shared services account, update the KMS key policy to allow access from the dev, qa, and prod accounts.

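```hcl
variable "kms_policy_identifiers" {
  description = "The policy identifiers for the KMS key policy."
  type        = list(string)
  default     = []
}

data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "kms" {
  # Keep the key manageable from the shared services account itself; KMS's
  # lockout safety check rejects a key policy that would lock out the caller
  statement {
    sid       = "owner"
    effect    = "Allow"
    actions   = ["kms:*"]
    resources = ["*"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }

  # Let the dev, qa and prod accounts use the key for pipeline artifacts
  statement {
    sid       = "kms"
    effect    = "Allow"
    actions   = ["kms:*"]
    resources = ["*"] # in a key policy, "*" means "this key" and is required

    principals {
      type        = "AWS"
      identifiers = var.kms_policy_identifiers
    }
  }
}

resource "aws_kms_key" "this" {
  ...
  policy = data.aws_iam_policy_document.kms.json
}
```

S3 policy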

In the shared services account, update the S3 bucket policy to allow access from the dev, qa, and prod accounts, and make sure the bucket is encrypted with the CMK you created.

```hcl
variable "s3_policy_identifiers" {
  description = "The policy identifiers for the S3 bucket policy."
  type        = list(string)
  default     = []
}

# The CMK used for the bucket; var.kms_master_key and local.bucket_name are defined elsewhere
data "aws_kms_key" "s3" {
  key_id = var.kms_master_key
}

data "aws_iam_policy_document" "s3" {
  statement {
    sid    = "s3"
    effect = "Allow"

    principals {
      identifiers = var.s3_policy_identifiers
      type        = "AWS"
    }

    actions = [
      "s3:Get*",
      "s3:List*",
      "s3:Put*"
    ]

    # The bucket itself (List*) and the objects in it (Get*, Put*)
    resources = [
      "arn:aws:s3:::${local.bucket_name}",
      "arn:aws:s3:::${local.bucket_name}/*"
    ]
  }
}

resource "aws_s3_bucket_policy" "s3" {
  ...
  policy = data.aws_iam_policy_document.s3.json
}
```

Cross-account roles

After setting up the resource-based policies for S3, KMS, and ECR, we need to allow CodePipeline in the shared services account to access the ECS services in the dev, qa, and prod accounts. This takes two pieces. First, each of the dev, qa, and prod accounts needs a cross-account role that the shared services account is allowed to assume, with the permissions required for the deployment attached. Second, the shared services account needs a policy that allows assuming those roles; for example, we can attach a policy to the CodePipeline service role that allows it to assume the target roles. With both in place, a pipeline action can set role_arn to assume a role in a target account and update the ECS service there.

In each of the dev, qa, and prod accounts, the cross-account role needs a trust policy that allows the shared services account to assume it:

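```hcl
# In each target account (dev, qa, prod)
variable "principal_arns" {
  description = "Principals allowed to assume the cross-account role."
  type        = list(string)
  default     = ["arn:aws:iam::SVC_ACCOUNT_ID:root"] # shared services account
}

# Trust policy for the cross-account role
data "aws_iam_policy_document" "assume" {
  statement {
    effect = "Allow"

    principals {
      type        = "AWS"
      identifiers = var.principal_arns
    }

    actions = ["sts:AssumeRole"]
  }
}
```

After that, you can use these roles with CodePipeline in the shared services account.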

```hcl
stage {
  name = "Deploy-qa"

  action {
    name            = "Deploy"
    category        = "Deploy"
    owner           = "AWS"
    provider        = "ECS"
    input_artifacts = ["image_definitions_qa"]
    version         = "1"
    role_arn        = var.qa_role_arn # cross-account role in the QA account that this action assumes

    configuration = {
      ClusterName = var.ecs_cluster_name
      ServiceName = var.ecs_service_name
      FileName    = "image_definitions.json"
    }
  }
}
```
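Here `var.qa_role_arn` points at the cross-account role in the QA account. The sketch below fills in the two pieces described in the cross-account roles section: the role and its deployment permissions in each target account, and the policy that lets the CodePipeline service role in the shared services account assume it. The role name, `aws_iam_role.codepipeline`, and the variables are hypothetical, and the ECS actions are an assumed starting set you may need to adjust. The trust policy is the `assume` policy document from the cross-account roles section.

```hcl
# ---- In each target account (dev, qa, prod) ----
# Hypothetical inputs: ARNs of the artifact bucket and CMK in the shared services account
variable "artifact_bucket_arn" { type = string }
variable "kms_key_arn" { type = string }

resource "aws_iam_role" "cross_account" {
  name               = "codepipeline-cross-account"                # hypothetical name
  assume_role_policy = data.aws_iam_policy_document.assume.json # trust policy
}

data "aws_iam_policy_document" "deploy" {
  # Register a new task definition revision and update the service
  statement {
    actions = [
      "ecs:DescribeServices",
      "ecs:DescribeTaskDefinition",
      "ecs:DescribeTasks",
      "ecs:ListTasks",
      "ecs:RegisterTaskDefinition",
      "ecs:TagResource",
      "ecs:UpdateService",
    ]
    resources = ["*"]
  }

  # Pass the task and execution roles to ECS for the new revision
  statement {
    actions   = ["iam:PassRole"]
    resources = ["*"]

    condition {
      test     = "StringEquals"
      variable = "iam:PassedToService"
      values   = ["ecs-tasks.amazonaws.com"]
    }
  }

  # Read pipeline artifacts from the shared services account and decrypt them
  statement {
    actions   = ["s3:GetObject", "s3:GetObjectVersion"]
    resources = ["${var.artifact_bucket_arn}/*"]
  }

  statement {
    actions   = ["kms:Decrypt", "kms:DescribeKey"]
    resources = [var.kms_key_arn]
  }
}

resource "aws_iam_role_policy" "deploy" {
  role   = aws_iam_role.cross_account.id
  policy = data.aws_iam_policy_document.deploy.json
}

# ---- In the shared services account ----
# Hypothetical input: ARNs of the dev, qa and prod cross-account roles
variable "target_role_arns" { type = list(string) }

data "aws_iam_policy_document" "assume_targets" {
  statement {
    actions   = ["sts:AssumeRole"]
    resources = var.target_role_arns
  }
}

resource "aws_iam_role_policy" "codepipeline_assume_targets" {
  role   = aws_iam_role.codepipeline.id # CodePipeline service role (assumed to exist)
  policy = data.aws_iam_policy_document.assume_targets.json
}
```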

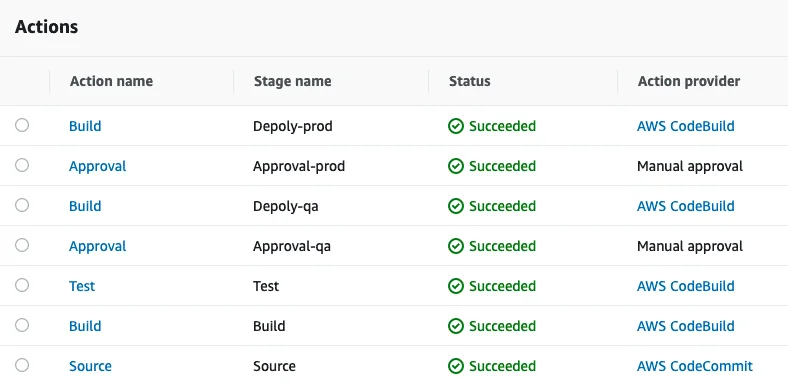

Lambda functions CI/CD pipeline

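Updating Lambda functions works differently from updating ECS services. CodePipeline offers no direct way to do it in this setup, so we use a CodeBuild project. From the build, we assume the cross-account roles mentioned earlier and use the AWS CLI to update the function code, publish a new version, and update the alias. The buildspec is written as a Terraform template: values such as `${region}` are filled in when it is rendered (for example with `templatefile()`), and `$${TEMP_ROLE}` renders to the shell variable `${TEMP_ROLE}`. A simple CodeBuild buildspec could look like this: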

```yaml
version: 0.2

phases:
  pre_build:
    commands:
      - aws sts get-caller-identity
      - TEMP_ROLE=`aws sts assume-role --role-arn ${assume_role_arn} --role-session-name test --region ${region} --endpoint-url https://sts.${region}.amazonaws.com`
      - export TEMP_ROLE
      - export AWS_ACCESS_KEY_ID=$(echo "$${TEMP_ROLE}" | jq -r '.Credentials.AccessKeyId')
      - export AWS_SECRET_ACCESS_KEY=$(echo "$${TEMP_ROLE}" | jq -r '.Credentials.SecretAccessKey')
      - export AWS_SESSION_TOKEN=$(echo "$${TEMP_ROLE}" | jq -r '.Credentials.SessionToken')
      # Confirm the build now runs as the cross-account role (never echo the credentials)
      - aws sts get-caller-identity
      - echo Enter pre_build phase on `date`
      - pip install awscli --upgrade --user
      - echo `aws --version`
      - cd lambda
      - zip lambda.zip ${lambda_python_file_name}
  build:
    commands:
      - echo Enter build phase on `date`
      - aws lambda update-function-code --function-name ${lambda_function_name} --zip-file fileb://lambda.zip --region ${region}
      # Wait for the code update to finish; publishing while it is in progress can fail
      - aws lambda wait function-updated --function-name ${lambda_function_name} --region ${region}
      - LAMBDA_VERSION=$(aws lambda publish-version --function-name ${lambda_function_name} --region ${region} | jq -r '.Version')
      - echo $LAMBDA_VERSION
      - aws lambda update-alias --function-name ${lambda_function_name} --name dev --region ${region} --function-version $LAMBDA_VERSION

artifacts:
  files:
    - lambda/lambda.zip
  discard-paths: yes
```

When you run this CodeBuild project within CodePipeline, you can test, package, publish a version, and update your alias. If anything goes wrong, you can simply point the alias back to the last working version.
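To run the buildspec above as part of the pipeline, the CodeBuild project is added as an action in the shared services account's pipeline, and the role it assumes in the target account needs Lambda permissions that match the CLI calls. A sketch with hypothetical names (`aws_codebuild_project.lambda_deploy_dev`, the `source_output` artifact, `var.lambda_function_arn`, and a cross-account role `aws_iam_role.cross_account`). The CodeBuild service role in the shared services account also needs `sts:AssumeRole` on that target role for the `aws sts assume-role` call to succeed.

```hcl
# ---- Shared services account: stage inside the aws_codepipeline resource ----
# Hypothetical names: the CodeBuild project and the "source_output" artifact
stage {
  name = "Deploy-lambda-dev"

  action {
    name            = "Deploy"
    category        = "Build"
    owner           = "AWS"
    provider        = "CodeBuild"
    version         = "1"
    input_artifacts = ["source_output"] # must contain the lambda/ directory

    configuration = {
      ProjectName = aws_codebuild_project.lambda_deploy_dev.name
    }
  }
}

# ---- Target account: permissions for the role assumed in pre_build ----
# Hypothetical input: ARN of the function being deployed
variable "lambda_function_arn" { type = string }

data "aws_iam_policy_document" "lambda_deploy" {
  statement {
    actions = [
      "lambda:GetFunctionConfiguration", # read function state, e.g. to wait for an update
      "lambda:UpdateFunctionCode",
      "lambda:PublishVersion",
      "lambda:UpdateAlias",
    ]
    resources = [
      var.lambda_function_arn,
      "${var.lambda_function_arn}:*", # qualified ARNs (versions and aliases)
    ]
  }
}

resource "aws_iam_role_policy" "lambda_deploy" {
  role   = aws_iam_role.cross_account.id # cross-account role (assumed to exist)
  policy = data.aws_iam_policy_document.lambda_deploy.json
}
```

Rolling back is then a matter of running `aws lambda update-alias` again with the previous version number, which this policy already permits.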
