Event-Driven Architecture using EventBridge

Why use Event-Driven architecture in the first place?

We are all used to the Request-Response way of communicating between the Client and API. The client sends an HTTP request to the server, awaits the response and proceeds with its life. This is all cool until we get into a situation where, let’s say, we have a request which involves synchronous calls to 5 microservices. What happens if something goes wrong in one of the services? Maybe a request times out or maybe our app just stays hanging.

To prevent this from happening, some smart developers (not me) have come up with the idea of the Event-Driven approach.

What exactly does Event-Driven mean?

It is a concept of communicating between different services asynchronously using events instead of requests. There are 3 key components:

- Event is a change of state in the system

- Event producer transmits events to consumers via event channels (in our case AWS EventBridge)

- Event consumer processes the event accordingly

How to implement EDA using AWS EventBridge?

EventBridge is AWS’ serverless pub/sub service made to connect different types of event sources (SaaS applications, internal AWS services or custom applications).

To get our terminology right:

- Event Bus receives the events we send and delegates them accordingly to their respective consumers

- Event Record is the structured data (JSON) describing the event that was triggered

- Event Rule is a rule which determines where the received event will be forwarded.

The flow looks something like this:

- Event producer (client, microservice, internal AWS service, etc.) sends an event to the Event Bus

- Event bus recognizes which Event Rule applies to the received Event Record

- The event is forwarded to its consumer
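
To make the three steps above a bit more tangible, here is a minimal in-memory sketch in Python. It is not how EventBridge works internally: `MiniBus`, `matches` and the sample names `blog.api` and `BlogPostCreated` are made up for illustration, and the matching only mimics the simplest kind of event pattern, where every field in the pattern lists the values it accepts.

```python
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]


def matches(pattern: dict[str, Any], event: Event) -> bool:
    """Simplified matching: each pattern key lists the accepted values.

    Nested dicts are matched recursively. Real EventBridge patterns support
    more operators, which this sketch deliberately ignores.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


class MiniBus:
    """Hypothetical stand-in for an Event Bus that holds rules and targets."""

    def __init__(self) -> None:
        self.rules: list[tuple[dict[str, Any], list[Handler]]] = []

    def add_rule(self, pattern: dict[str, Any], targets: list[Handler]) -> None:
        self.rules.append((pattern, targets))

    def put_event(self, event: Event) -> int:
        """Forward the event to every target of every matching rule."""
        delivered = 0
        for pattern, targets in self.rules:
            if matches(pattern, event):
                for target in targets:
                    target(event)
                    delivered += 1
        return delivered


received: list[str] = []
bus = MiniBus()
bus.add_rule(
    {"source": ["blog.api"], "detail-type": ["BlogPostCreated"]},
    [lambda e: received.append("email"), lambda e: received.append("entities")],
)
count = bus.put_event(
    {"source": "blog.api", "detail-type": "BlogPostCreated", "detail": {"topic": "summer"}}
)
ignored = bus.put_event({"source": "other.app", "detail-type": "Ping", "detail": {}})
```

The producer only knows about the bus, and the consumers only know about the events they receive. That decoupling is the whole point of the approach.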

Let's have a look at an example:

To depict this with more context, let’s say we have an API for creating blog posts.

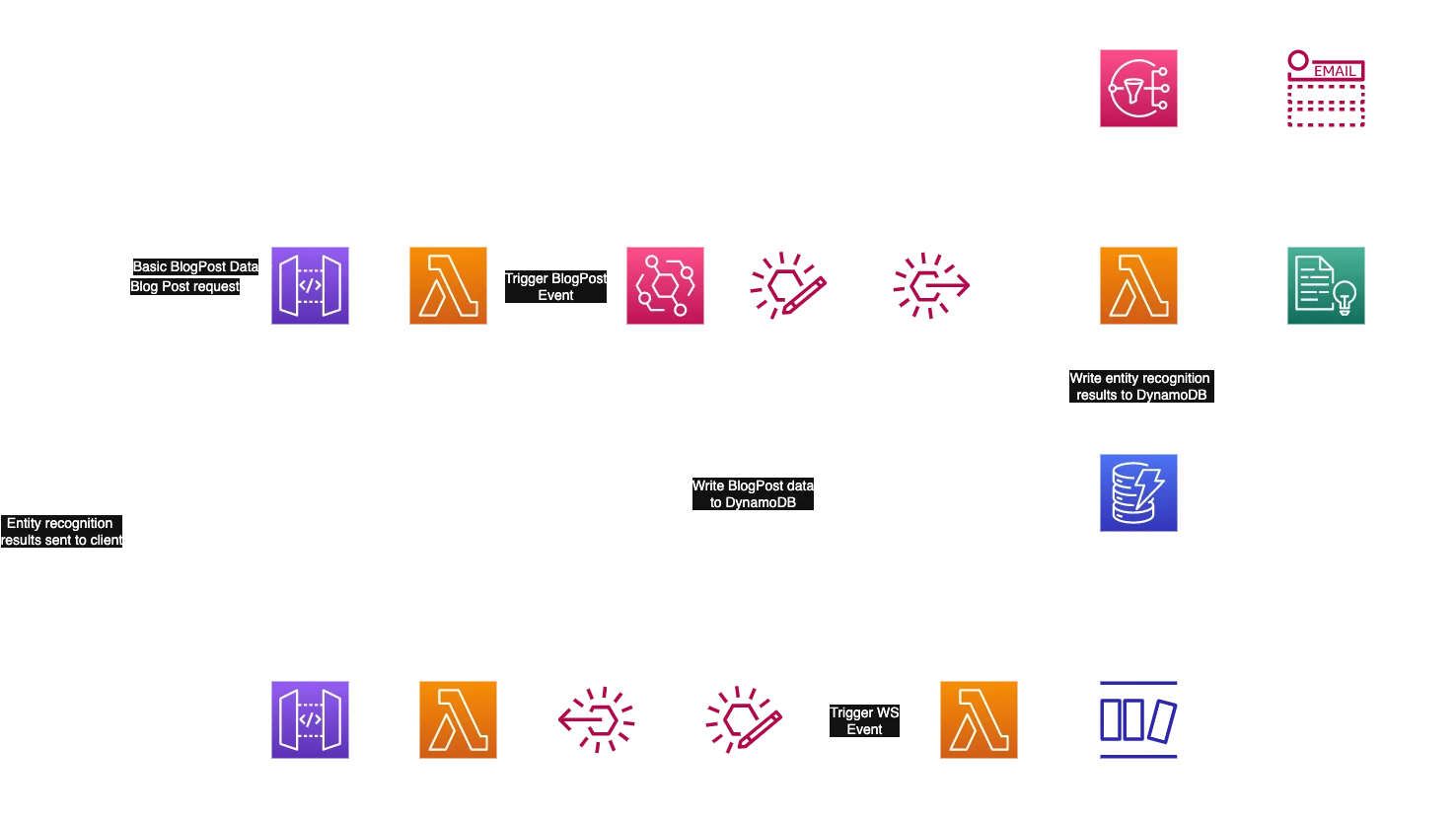

Use case: A user writes a blog post and submits it, which calls our API. The received blog post data is validated and saved to the DB, and a response is sent to our user. Afterward, an event is triggered for further document processing. Once that is done, the additional document data is added to our DB as well and sent to our user via WebSocket.

Let us slow down and explain the flow bit by bit :)

The initial action is the HTTP Request to our API Gateway which triggers our Lambda for creating a Blog Post - initiated by our User.

Let’s say our Blog Post object which is getting sent in the request looks like this:

    {
      "author": "hunter s thompson",
      "title": "Title",
      "body": "Joe is going to Spain",
      "topic": "summer"
    }

We then receive that object and validate it before we write it to DynamoDB. This way we make sure that even if some of our later steps fail, our blog post is still saved in the DB. Afterwards, we trigger an event on our Event Bus and the response with the basic BlogPost info is returned to our user.

Basic BlogPost info would look something like this:

    {
      "id": "blogPostId",
      "createdAt": "timestamp",
      "author": "hunter s thompson",
      "title": "Title",
      "body": "Joe is going to Spain",
      "topic": "summer"
    }
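
A rough sketch of what the create Lambda could do, in Python. The validation rules, the bus name `blog-bus`, the source `blog.api` and the detail type `BlogPostCreated` are all assumptions made for this example. `table` and `events` are passed in so the sketch runs without AWS; in a real Lambda they would be a boto3 DynamoDB Table resource and `boto3.client("events")`.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Any

REQUIRED_FIELDS = ("author", "title", "body", "topic")


def validate(payload: dict[str, Any]) -> None:
    """Reject payloads where a required field is missing or not a non-empty string."""
    missing = [
        f for f in REQUIRED_FIELDS
        if not isinstance(payload.get(f), str) or not payload[f].strip()
    ]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")


def build_event_entry(post: dict[str, Any]) -> dict[str, str]:
    """Shape one entry for the EventBridge PutEvents call."""
    return {
        "EventBusName": "blog-bus",       # assumed bus name
        "Source": "blog.api",             # assumed source
        "DetailType": "BlogPostCreated",  # assumed detail type
        "Detail": json.dumps(post),       # Detail has to be a JSON string
    }


def create_blog_post(payload: dict[str, Any], table, events) -> dict[str, Any]:
    """Validate, save first, then publish; returns the basic BlogPost info."""
    validate(payload)
    post = {
        "id": str(uuid.uuid4()),
        "createdAt": datetime.now(timezone.utc).isoformat(),
        **payload,
    }
    table.put_item(Item=post)  # saved before anything else can fail
    events.put_events(Entries=[build_event_entry(post)])
    return post


class Recorder:
    """In-memory stand-in for the AWS clients so the sketch runs offline."""

    def __init__(self) -> None:
        self.calls: list[tuple[str, dict[str, Any]]] = []

    def put_item(self, **kwargs: Any) -> None:
        self.calls.append(("put_item", kwargs))

    def put_events(self, **kwargs: Any) -> None:
        self.calls.append(("put_events", kwargs))


fake = Recorder()
created = create_blog_post(
    {
        "author": "hunter s thompson",
        "title": "Title",
        "body": "Joe is going to Spain",
        "topic": "summer",
    },
    table=fake,
    events=fake,
)
```

Writing to the table before publishing the event keeps the order described above: if publishing fails, the blog post is already stored.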

What does the flow so far mean for us? We have written our key BlogPost data to DynamoDB so it can be displayed on the client. Now we can proceed with the next steps using our Event Bus: sending an email notification to our subscribers using SNS and enriching our BlogPost with AWS Comprehend entity recognition.

The Event Record received is then sent to 2 targets according to our blogPostCreation Rule:

- SNS (Simple Notification Service)

- Entity recognition Lambda

These 2 steps of our flow will be executed asynchronously.
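
For illustration, the blogPostCreation rule and its two targets could be described with parameters like the ones below. The bus name, source, detail type, target IDs and ARNs (region and account number included) are placeholders I made up. The parameter names follow the boto3 EventBridge client's `put_rule` and `put_targets` calls.

```python
import json

BUS_NAME = "blog-bus"  # assumed bus name
RULE_NAME = "blogPostCreation"

# Event pattern: match events whose source and detail-type are in these lists.
event_pattern = {
    "source": ["blog.api"],              # assumed source
    "detail-type": ["BlogPostCreated"],  # assumed detail type
}

put_rule_params = {
    "Name": RULE_NAME,
    "EventBusName": BUS_NAME,
    "EventPattern": json.dumps(event_pattern),  # the API expects a JSON string
    "State": "ENABLED",
}

# One rule, two targets: both receive the same event independently.
put_targets_params = {
    "Rule": RULE_NAME,
    "EventBusName": BUS_NAME,
    "Targets": [
        {
            "Id": "notify-subscribers",
            "Arn": "arn:aws:sns:eu-central-1:123456789012:blog-posts",  # placeholder
        },
        {
            "Id": "entity-recognition",
            "Arn": "arn:aws:lambda:eu-central-1:123456789012:function:entity-recognition",  # placeholder
        },
    ],
}

# With boto3 installed and credentials configured, these would be applied as:
#   events = boto3.client("events")
#   events.put_rule(**put_rule_params)
#   events.put_targets(**put_targets_params)
```

The targets also need permissions that allow EventBridge to invoke them (a topic policy for SNS, a resource-based permission for the Lambda), which this sketch leaves out.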

SNS will simply send an email notifying the users who are subscribed to the topic our BlogPost is about, in our case "summer".

The entity recognition Lambda will make a synchronous call to AWS Comprehend and update the BlogPost in DynamoDB with the newly acquired data.

The BlogPost in the DB now looks like this:

    {
      "id": "blogPostId",
      "createdAt": "timestamp",
      "author": "hunter s thompson",
      "title": "Title",
      "body": "Joe is going to Spain",
      "topic": "summer",
      "entities": {
        "locations": ["Spain"],
        "persons": ["Joe"]
      }
    }
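
Here is one way the entity recognition Lambda could turn a Comprehend response into the `entities` object shown above. The sample response is hand-written in the shape that `detect_entities` returns, with invented scores. The `min_score` threshold and the function name `group_entities` are my own assumptions.

```python
from typing import Any

# Comprehend entity types we keep, mapped to the keys used in the BlogPost.
TYPE_TO_KEY = {"LOCATION": "locations", "PERSON": "persons"}


def group_entities(response: dict[str, Any], min_score: float = 0.8) -> dict[str, list[str]]:
    """Group entity texts by type, skipping low-confidence hits and duplicates."""
    grouped: dict[str, list[str]] = {key: [] for key in TYPE_TO_KEY.values()}
    for entity in response.get("Entities", []):
        key = TYPE_TO_KEY.get(entity["Type"])
        if key and entity["Score"] >= min_score and entity["Text"] not in grouped[key]:
            grouped[key].append(entity["Text"])
    return grouped


# Hand-written sample for the body "Joe is going to Spain" (scores are invented).
sample_response = {
    "Entities": [
        {"Text": "Joe", "Type": "PERSON", "Score": 0.99, "BeginOffset": 0, "EndOffset": 3},
        {"Text": "Spain", "Type": "LOCATION", "Score": 0.98, "BeginOffset": 16, "EndOffset": 21},
    ]
}
entities = group_entities(sample_response)

# In the Lambda, the response would come from something like:
#   comprehend = boto3.client("comprehend")
#   response = comprehend.detect_entities(Text=post["body"], LanguageCode="en")
```

The grouped result is what would then be written back to the BlogPost item in DynamoDB.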

Now we have to deliver these new results to our client using DynamoDB Stream, EventBus and API Gateway WebSocket.

DynamoDB Stream captures the changes made in our BlogPost table, so it will catch the data written by our Entity Recognition Lambda. A DynamoDB Stream Handler Lambda then takes that data and sends it to the WebSocket Event Bus.
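
A Lambda subscribed to the stream could translate stream records into events for the WebSocket Event Bus roughly like this. The sketch assumes the stream is configured to include the new item image. The names `websocket-bus`, `blog.stream` and `BlogPostUpdated` are placeholders, and `from_attr` only handles a subset of DynamoDB's attribute types.

```python
import json
from typing import Any


def from_attr(value: dict[str, Any]) -> Any:
    """Convert one DynamoDB-typed attribute value into plain Python (subset of types)."""
    ((tag, raw),) = value.items()
    if tag in ("S", "BOOL"):
        return raw
    if tag == "N":
        try:
            return int(raw)
        except ValueError:
            return float(raw)
    if tag == "NULL":
        return None
    if tag == "L":
        return [from_attr(v) for v in raw]
    if tag == "M":
        return {k: from_attr(v) for k, v in raw.items()}
    raise ValueError(f"unsupported attribute type: {tag}")


def to_event_entries(stream_event: dict[str, Any]) -> list[dict[str, str]]:
    """Build PutEvents entries for modified items that now carry entities."""
    entries = []
    for record in stream_event.get("Records", []):
        image = record.get("dynamodb", {}).get("NewImage")
        if record.get("eventName") != "MODIFY" or image is None:
            continue  # only updates are interesting here
        post = from_attr({"M": image})
        if "entities" not in post:
            continue
        entries.append({
            "EventBusName": "websocket-bus",  # assumed bus name
            "Source": "blog.stream",          # assumed source
            "DetailType": "BlogPostUpdated",  # assumed detail type
            "Detail": json.dumps(post),
        })
    return entries


sample_stream_event = {
    "Records": [
        {"eventName": "INSERT", "dynamodb": {"NewImage": {"id": {"S": "blogPostId"}}}},
        {
            "eventName": "MODIFY",
            "dynamodb": {
                "NewImage": {
                    "id": {"S": "blogPostId"},
                    "topic": {"S": "summer"},
                    "entities": {"M": {
                        "locations": {"L": [{"S": "Spain"}]},
                        "persons": {"L": [{"S": "Joe"}]},
                    }},
                }
            },
        },
    ]
}
entries = to_event_entries(sample_stream_event)
```

The initial INSERT is skipped on purpose: the client already got the basic BlogPost in the HTTP response, so only the enriched update needs to travel over the WebSocket.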

We could’ve used the same Event Bus we have used before, but let’s imagine that our app is larger than we show it here and that we have tens of WebSocket events - in a case like that, it’s a lot more intuitive to have a dedicated Event Bus for WebSocket events.

So, our WebSocket Event Bus receives an event from the DynamoDB Stream Handler Lambda and, according to the BlogPostUpdate Rule, sends it to our WebSocket Handler Lambda, which then sends the data to our client via API Gateway WebSocket.
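
The last hop could look something like the sketch below. How connection IDs are stored and looked up is not covered here, so they are simply passed in. The message shape and the name `push_update` are assumptions. `api` stands in for `boto3.client("apigatewaymanagementapi", endpoint_url=...)`, whose `post_to_connection` call takes a `ConnectionId` and the `Data` to send.

```python
import json
from typing import Any, Iterable


def push_update(event: dict[str, Any], connection_ids: Iterable[str], api) -> int:
    """Send the event's detail to each open connection; returns how many were sent."""
    payload = json.dumps(
        {"type": event["detail-type"], "blogPost": event["detail"]}
    ).encode("utf-8")
    sent = 0
    for connection_id in connection_ids:
        api.post_to_connection(ConnectionId=connection_id, Data=payload)
        sent += 1
    return sent


class FakeApi:
    """In-memory stand-in for the API Gateway management client."""

    def __init__(self) -> None:
        self.sent: list[tuple[str, bytes]] = []

    def post_to_connection(self, ConnectionId: str, Data: bytes) -> None:
        self.sent.append((ConnectionId, Data))


# Shape of the event as EventBridge would hand it to the Lambda (trimmed).
sample_event = {
    "source": "blog.stream",
    "detail-type": "BlogPostUpdated",
    "detail": {
        "id": "blogPostId",
        "entities": {"locations": ["Spain"], "persons": ["Joe"]},
    },
}
api = FakeApi()
sent = push_update(sample_event, ["conn-1", "conn-2"], api)
```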

I hope I've managed to make an interesting intro to EDA and encourage you to try implementing it in your own project :)

To sign off, I’ll use my favorite example to explain why I think async architecture is so cool:

You want to order a pizza, but the whole process is synchronous: you call the pizza place, you say which pizza you want, but you can’t hang up. You have to wait on the phone until the pizza arrives at your place. Stressful, innit?
