SaaS Multi-tenant Applications in EKS

Multi-tenant SaaS systems provide a lot of benefits for customers. These applications are cost-effective, easily scalable, often easier to maintain, and very agile. In this blog, we will dive a bit deeper into the architecture of multi-tenant SaaS applications on EKS, how new tenants are onboarded to the system, and how the business and technology sides are strongly connected in the SaaS ecosystem. By the end of this post, you will understand some of the core concepts of SaaS applications and how to implement them using EKS and other AWS services.

Onboarding a new tenant

As a SaaS provider, we want a smooth and seamless experience for our customers (tenants). Customers should enter as little information as possible in the registration form and be productive immediately. Besides basic information, customers need to pick a pricing plan for the software we provide. Under the hood, the SaaS provider needs to provision the new tenant, perform all the configuration, assign roles and policies, and deploy infrastructure.

When using the silo model of isolation, this job is far more complex, and the SaaS provider has to build various automations for deploying new tenants, since the combination of infrastructure and services differs from tenant to tenant. When we use Amazon EKS to build multi-tenant SaaS applications, by contrast, we have a unified path for deploying new tenants, although it leaves less room for tenant-specific requests. For example, our established pipeline does not deploy Amazon SQS for each tenant, but if one specific customer would like to use it, we can add that component to their infrastructure. As you can see, a lot happens behind the scenes while the customer only registers and picks a pricing plan. Let’s see how that looks on a diagram.

As previously mentioned, a few things happen when the onboarding of a new tenant starts. In the upper part of the diagram, we can see the “User management” service with three core AWS services: Cognito, DynamoDB and IAM. DynamoDB stores user-related information such as the User Pool, the Identity Pool, the admin user (tenant owner), and custom claims, which are tenant attributes that will be managed within our system. Note that the “User management” service creates a tenant administrator (owner) in the newly created user pool.
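To make the stored data concrete, here is a minimal sketch of the item the “User management” service might write to DynamoDB. The attribute names, the identifiers and the `tier` claim are hypothetical; only the `{"S": ...}` / `{"M": ...}` attribute-value encoding is DynamoDB's own low-level format, the shape that `PutItem` expects.

```python
from dataclasses import dataclass, field


@dataclass
class TenantUserRecord:
    """Hypothetical shape of the item stored by the "User management" service."""
    tenant_id: str
    user_pool_id: str        # Cognito user pool created for this tenant
    identity_pool_id: str    # Cognito identity pool for this tenant
    admin_email: str         # tenant owner created in the new user pool
    custom_claims: dict[str, str] = field(default_factory=dict)

    def to_dynamodb_item(self) -> dict:
        """Encode as DynamoDB low-level attribute values (strings and a map)."""
        return {
            "tenant_id": {"S": self.tenant_id},
            "user_pool_id": {"S": self.user_pool_id},
            "identity_pool_id": {"S": self.identity_pool_id},
            "admin_email": {"S": self.admin_email},
            # Custom claims become a map of string attributes.
            "custom_claims": {
                "M": {k: {"S": v} for k, v in self.custom_claims.items()}
            },
        }
```

The resulting dictionary could be passed as the `Item` of a `PutItem` call; keeping the claims in a map lets each tenant carry different attributes without a schema change.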

In the lower part of the diagram we again have DynamoDB, but this time it stores tenant information such as the Tenant ID, the pricing plan, and the identity mapping, since we as SaaS providers need to know which user is bound to which tenant. Of course, DynamoDB can be shared, with the “User” and “Tenant” management services storing their data in separate tables.
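The identity mapping is what lets the system answer “which tenant does this user belong to?”. The sketch below uses in-memory dictionaries as a stand-in for the tenant table and the mapping table; the class and method names and the plan names are hypothetical, not an AWS API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tenant:
    tenant_id: str
    pricing_plan: str  # hypothetical plan names, e.g. "basic" or "premium"


class TenantRegistry:
    """In-memory stand-in for the tenant table and the identity-mapping table."""

    def __init__(self) -> None:
        self._tenants: dict[str, Tenant] = {}
        self._user_to_tenant: dict[str, str] = {}

    def register_tenant(self, tenant: Tenant, owner_user_id: str) -> None:
        # Onboarding: store the tenant and bind its owner to it.
        if tenant.tenant_id in self._tenants:
            raise ValueError(f"tenant {tenant.tenant_id} already exists")
        self._tenants[tenant.tenant_id] = tenant
        self._user_to_tenant[owner_user_id] = tenant.tenant_id

    def add_user(self, tenant_id: str, user_id: str) -> None:
        # The owner invites another user into the same tenant.
        if tenant_id not in self._tenants:
            raise KeyError(tenant_id)
        self._user_to_tenant[user_id] = tenant_id

    def tenant_for_user(self, user_id: str) -> Tenant:
        # Identity mapping lookup; raises KeyError for unknown users.
        return self._tenants[self._user_to_tenant[user_id]]
```

In DynamoDB the same lookup would typically be a `GetItem` keyed by the user ID on the mapping table, followed by a `GetItem` on the tenant table.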

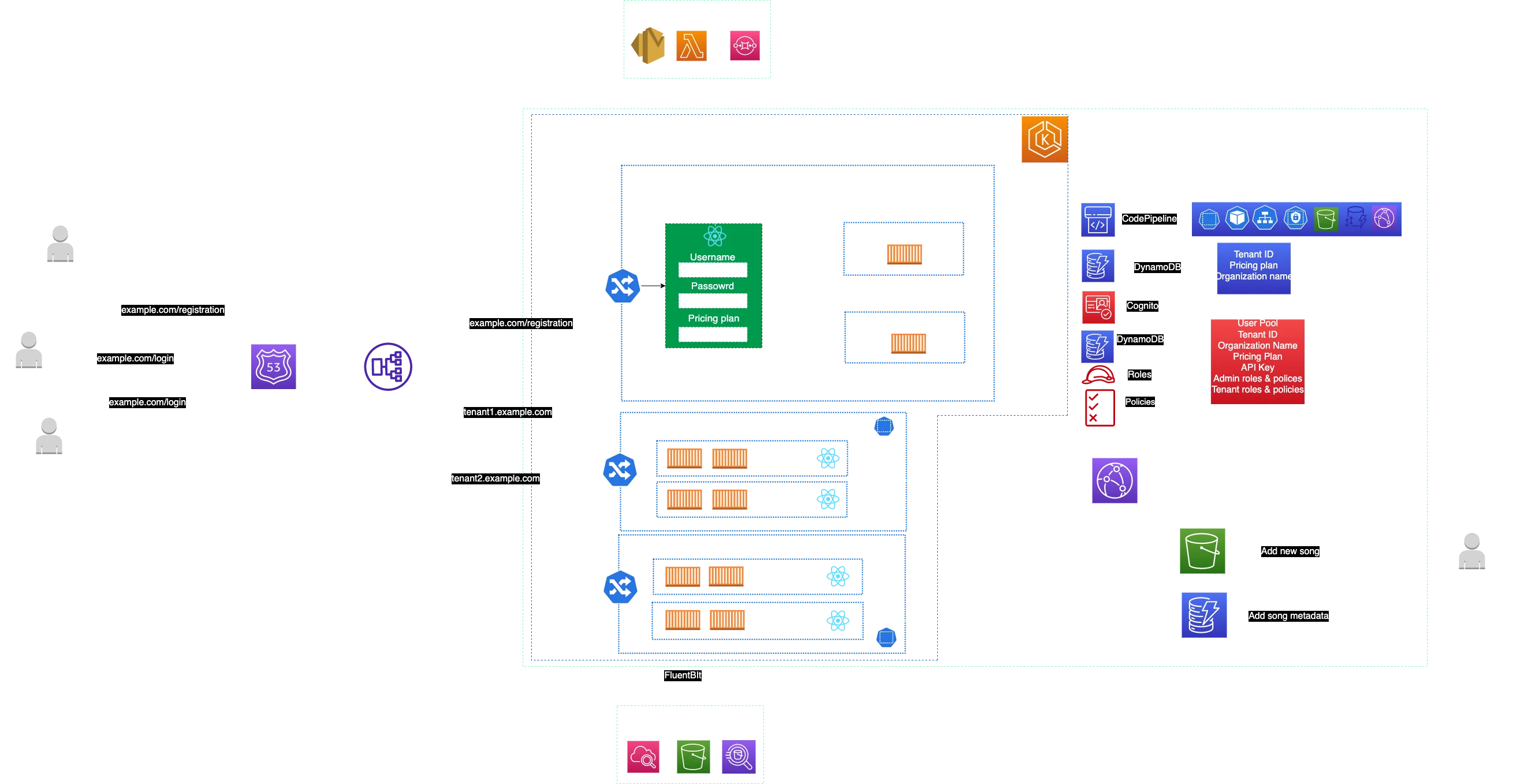

So far we have talked about user creation and where we store information, but what about the actual applications and general resources that are tenant-specific? Since we are using EKS with the namespace-per-tenant isolation model from a previous blog post, AWS CodePipeline creates and deploys all the resources needed. It deploys the microservices, configures isolation, and creates Kubernetes-native resources such as Pods, Services, Ingresses, Persistent Volumes (if needed), Roles, Horizontal Pod Autoscalers, and more, as well as the AWS resources the requirements call for, such as a DynamoDB table, an S3 bucket, or a CloudFront distribution.
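As an illustration of what one pipeline step might generate, here is a minimal sketch that builds a tenant's Namespace, ResourceQuota and HorizontalPodAutoscaler as JSON. The `tenant-<id>` naming, the per-plan limits and the `music-api` Deployment name are hypothetical; the HPA also assumes that the Deployment exists and that a metrics server is installed.

```python
import json
import re

# Hypothetical per-plan limits; real values depend on the workloads you run.
PLAN_LIMITS = {
    "basic": {"cpu": "2", "memory": "4Gi", "pods": "10", "max_replicas": 3},
    "premium": {"cpu": "8", "memory": "16Gi", "pods": "50", "max_replicas": 10},
}

# Namespace names must be valid DNS-1123 labels (at most 63 characters).
DNS1123_LABEL = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


def tenant_manifests(tenant_id: str, plan: str, deployment: str = "music-api") -> dict:
    """Build a v1 List with the tenant's Namespace, ResourceQuota and HPA."""
    ns = f"tenant-{tenant_id}"
    if len(ns) > 63 or not DNS1123_LABEL.match(ns):
        raise ValueError(f"invalid namespace name: {ns!r}")
    limits = PLAN_LIMITS[plan]  # KeyError for an unknown plan
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": ns, "labels": {"tenant": tenant_id, "plan": plan}},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": ns},
        "spec": {"hard": {
            "requests.cpu": limits["cpu"],
            "requests.memory": limits["memory"],
            "pods": limits["pods"],
        }},
    }
    hpa = {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment, "namespace": ns},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": 1,
            "maxReplicas": limits["max_replicas"],
            "metrics": [{"type": "Resource", "resource": {
                "name": "cpu",
                "target": {"type": "Utilization", "averageUtilization": 70},
            }}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [namespace, quota, hpa]}


if __name__ == "__main__":
    # A pipeline step could pipe this output into `kubectl apply -f -`.
    print(json.dumps(tenant_manifests("acme", "basic"), indent=2))
```

Tying the quota to the pricing plan is one way the business side (which plan the customer picked) flows directly into the technical side (how many resources the namespace may consume).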

Still not clear? Okay, let’s bring an example to the table. Imagine you are a SaaS provider and you’ve created software for listening to music. A new customer registers and picks a pricing plan. This user is called the admin user or tenant owner and is allowed to do everything related to the account. The admin can also manage other users within the account and, for example, give them listen-only access, so those users are not allowed to create playlists, choose favorite musicians, and so on. Meanwhile, you as the provider monitor all tenants, perform data analytics and query logs, and based on the results decide whether you need to contact a specific user or other teams within the SaaS provider. Whenever a new customer registers, a pipeline created by the DevOps team deploys all the required resources, so that when the customer logs in right after creating the account, all features are available. In general, when you think about SaaS, think about the business and technical parts at the same time.
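The owner versus listen-only split can be expressed as a small role-to-permission table. This is a minimal sketch with hypothetical role and action names; it also enforces that no user, whatever their role, can act on another tenant's resources.

```python
from enum import Enum


class Role(Enum):
    OWNER = "owner"        # tenant admin: can do everything in the account
    LISTENER = "listener"  # listen-only user created by the owner


# Hypothetical action names for the music app example.
PERMISSIONS = {
    Role.OWNER: {"listen", "create_playlist", "favorite_artist", "manage_users"},
    Role.LISTENER: {"listen"},
}


def authorize(user_tenant: str, role: Role, resource_tenant: str, action: str) -> bool:
    """Allow an action only inside the user's own tenant and only if the
    user's role grants it."""
    if user_tenant != resource_tenant:
        return False  # cross-tenant access is never allowed
    return action in PERMISSIONS[role]
```

Checking the tenant first keeps cross-tenant isolation independent of how the role table evolves.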

Under the hood

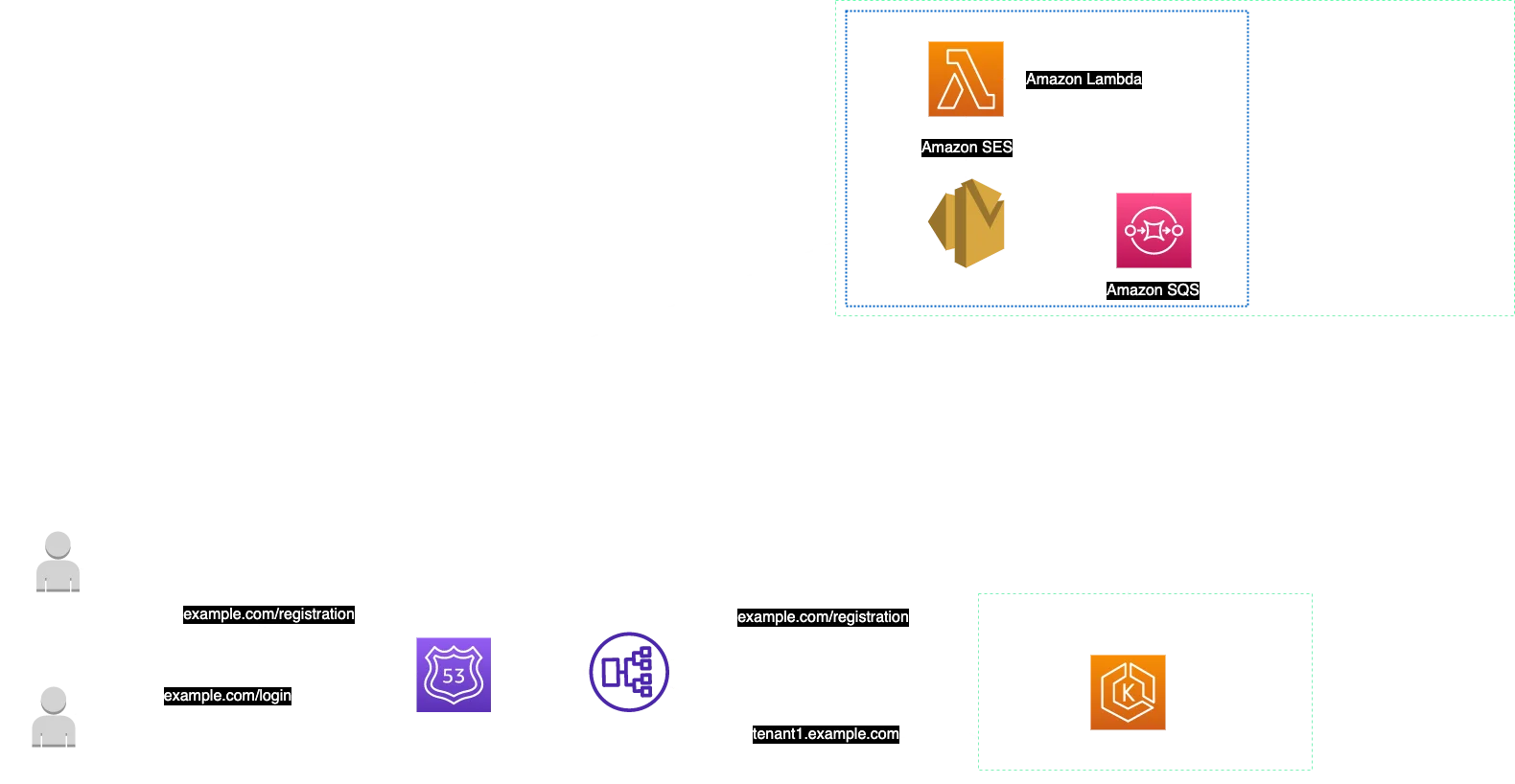

Now that we understand how onboarding a new tenant works, let’s dive a bit deeper into the infrastructure part of the story. As we already know, the microservices are deployed on EKS, but how are they exposed to the public? When a user enters the URL example.com/register, Amazon Route 53 is the first line of “defense”: it resolves the domain and determines where the request should be routed. Because a Route 53 alias record can point to an Elastic Load Balancer, the user’s request is sent to the load balancer, whose rules determine which microservice should handle it. Do not forget about quotas when using shared resources, since they can shape the architecture itself. For example, by default an Application Load Balancer can have up to 100 target groups and 50 listeners. So whenever you design a multi-tenant SaaS solution, bring the quotas of shared resources to the table from day one.
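A quick back-of-the-envelope check shows why those quotas matter. This sketch assumes, hypothetically, that each tenant gets one target group per microservice and that a tenant is never split across load balancers; the 100 figure is the default quota quoted above and should be checked against your account's current limits.

```python
import math

# Default target-group quota per Application Load Balancer, as quoted above.
ALB_TARGET_GROUPS_QUOTA = 100


def albs_needed(tenants: int, services_per_tenant: int,
                tg_quota: int = ALB_TARGET_GROUPS_QUOTA) -> int:
    """Number of load balancers needed if each tenant uses one target group
    per microservice and all of a tenant's target groups share one ALB."""
    if services_per_tenant < 1 or services_per_tenant > tg_quota:
        raise ValueError("services_per_tenant must be between 1 and the quota")
    tenants_per_alb = tg_quota // services_per_tenant
    return math.ceil(tenants / tenants_per_alb)


if __name__ == "__main__":
    # 3 microservices per tenant -> 33 tenants per ALB -> 250 tenants need 8 ALBs
    print(albs_needed(250, 3))
```

With only three services per tenant, a single load balancer already tops out at 33 tenants, which is why the routing design (shared target groups, host-based rules, or several load balancers) has to be decided early.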

Because the customer should have the best possible experience and start using the software as soon as possible, they are notified immediately that the account has been created. This serverless block of the architecture for welcoming new customers scales automatically: once the software reaches thousands of customers, the Amazon SQS queue buffers all the requests, which are then processed by an AWS Lambda function that sends emails through Amazon SES. Take a look at the diagram before we continue.
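Here is a minimal sketch of the Lambda side of that flow, assuming the onboarding service enqueues a JSON message with hypothetical `email` and `name` fields. The SQS event shape (`Records`, `body`, `messageId`) and the `batchItemFailures` response are the ones Lambda uses for SQS event sources; the partial-failure response only takes effect when ReportBatchItemFailures is enabled on the event source mapping. The email sender is injected so the sketch runs without AWS; in a real function it could wrap the SES `send_email` call from boto3.

```python
import json
from typing import Callable

# (to_address, subject, body) -> None; in Lambda this could wrap an SES client.
SendFn = Callable[[str, str, str], None]


def make_handler(send: SendFn) -> Callable[[dict, object], dict]:
    """Build a Lambda handler that sends one welcome email per SQS message."""

    def handler(event: dict, context: object) -> dict:
        failures = []
        for record in event.get("Records", []):
            try:
                # Hypothetical message shape: {"email": "...", "name": "..."}
                msg = json.loads(record["body"])
                send(msg["email"], "Welcome aboard!",
                     f"Hi {msg['name']}, your account is ready to use.")
            except Exception as exc:
                # Report only this message as failed so SQS retries it alone.
                print(f"failed to process {record.get('messageId')}: {exc}")
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler
```

Reporting individual failures means one malformed registration message does not force the whole batch, and every other customer's welcome email, to be retried.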

Between the load balancer and the Kubernetes Pods themselves sits an Ingress. An Ingress defines a set of rules that expose HTTP and HTTPS traffic from public-facing components to Services, and through them to specific containers. A tenant namespace can have one or more containers that run different pieces of code or copies of the same code.
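As a sketch of such a rule set, the function below builds a per-tenant Ingress that routes a tenant subdomain to a Service in the tenant's namespace. It assumes the AWS Load Balancer Controller with an IngressClass named `alb`; the annotations are that controller's own, with `group.name` letting several tenant Ingresses share one load balancer. The host-per-tenant scheme, the `tenant-<id>` namespace naming and the `saas-shared` group name are hypothetical choices.

```python
def tenant_ingress(tenant_id: str, domain: str, service: str, port: int) -> dict:
    """Ingress routing https://<tenant>.<domain>/ to a Service in the tenant namespace."""
    ns = f"tenant-{tenant_id}"  # hypothetical namespace naming convention
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{tenant_id}-ingress",
            "namespace": ns,
            "annotations": {
                # Ingresses with the same group name share one ALB.
                "alb.ingress.kubernetes.io/group.name": "saas-shared",
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                # Route straight to Pod IPs instead of node ports.
                "alb.ingress.kubernetes.io/target-type": "ip",
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [{
                "host": f"{tenant_id}.{domain}",
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }
```

Sharing one load balancer across tenants keeps costs down but counts against that load balancer's quotas, so the group size is itself a design parameter.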

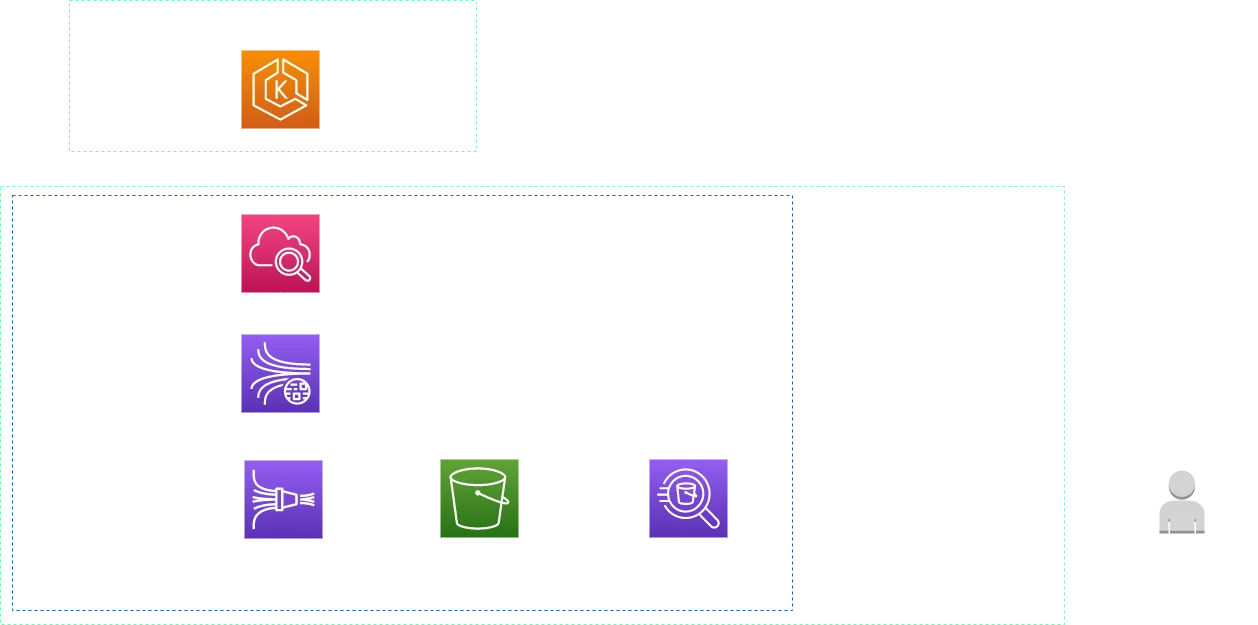

Fluent Bit, deployed as a sidecar container, collects the logs from the Pods within the namespace. The collected logs from all Pods and namespaces are sent to Amazon Kinesis Data Streams and then to Amazon Kinesis Data Firehose, which archives them in an S3 bucket where we can query them with Amazon Athena.
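For this pipeline to be useful per tenant, each log record needs to carry its tenant, and the archive should be laid out so Athena can read one tenant's logs without scanning everyone's. This minimal sketch shows both ideas; the record fields and the `logs/tenant_id=.../year=.../` layout are hypothetical conventions. Kinesis Data Firehose dynamic partitioning can derive prefixes like this from fields in the record.

```python
import json
from datetime import datetime, timezone


def enrich(log_line: str, tenant_id: str, pod: str) -> str:
    """Wrap a raw log line in JSON tagged with its tenant and Pod, the kind of
    record a sidecar could forward to Kinesis Data Streams."""
    return json.dumps({"tenant_id": tenant_id, "pod": pod, "log": log_line})


def s3_partition_prefix(tenant_id: str, ts: datetime) -> str:
    """Hive-style S3 prefix (key=value/...) so Athena can prune partitions
    by tenant and date instead of scanning every tenant's logs."""
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    ts = ts.astimezone(timezone.utc)
    return (f"logs/tenant_id={tenant_id}/"
            f"year={ts.year:04d}/month={ts.month:02d}/day={ts.day:02d}/")
```

If the Athena table is partitioned on these keys, a query filtered with `WHERE tenant_id = 'acme'` reads only that tenant's objects, which keeps both query cost and cross-tenant exposure down.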

Let’s not pay too much attention to the implementation of the monitoring part, since it can be implemented in various ways. What matters is that the SaaS provider has a centralized view of all tenants, which makes them much easier to manage. With this architecture, both agility and customer satisfaction improve. By following the metrics on how the system performs, SaaS providers can help customers have a better experience. For example, if one tenant is using 30% of the DynamoDB resources while every other tenant uses around 5%, the SaaS provider needs to check whether something is wrong with that specific tenant or with the infrastructure.
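The 30% versus 5% example can be turned into a simple automated check. This is a minimal sketch that flags tenants whose usage exceeds a multiple of the median; the threshold factor of 3 is an arbitrary, hypothetical choice, and a real system would feed it from the centralized metrics.

```python
from statistics import median


def noisy_tenants(usage: dict[str, float], factor: float = 3.0) -> list[str]:
    """Return tenants whose usage exceeds `factor` times the median usage."""
    if not usage:
        return []
    typical = median(usage.values())
    return sorted(t for t, u in usage.items() if u > factor * typical)


if __name__ == "__main__":
    # One tenant at 30% of DynamoDB usage, fourteen others at 5% each.
    usage = {"t1": 30.0, **{f"t{i}": 5.0 for i in range(2, 16)}}
    print(noisy_tenants(usage))
```

Comparing against the median rather than the mean keeps a single heavy tenant from raising the baseline it is being compared with.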

Returning to our music app, DynamoDB would store the song metadata and an S3 bucket would store the actual song files. The S3 bucket and DynamoDB are shared among tenants, but each tenant has a dedicated table and a dedicated folder (an object key prefix) that isolate its data from other tenants. Because the monitoring system is also shared, we only deploy new Fluent Bit containers to collect that tenant's logs and send them to its S3 folder. Use prefixes consistently for better scalability, since Amazon S3 request-rate limits apply per prefix. Let’s say that a new table and folder are created as part of the CodePipeline workflow after each registration. But what if our SaaS product reaches millions of users: how can we keep every customer satisfied when retrieving songs from the S3 bucket? A good approach is to cache the songs, reducing latency when another user wants to listen to the same song, and that is why we use CloudFront.
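Because isolation in the shared bucket depends entirely on key naming, it helps to build keys in one place and validate the IDs that go into them. This minimal sketch assumes hypothetical conventions: `<tenant>/songs/<song>.mp3` for S3 objects and `songs-<tenant>` for the per-tenant DynamoDB table.

```python
import re

# Hypothetical ID format: lowercase letters, digits and dashes only, so an ID
# can never contain "/" or ".." and escape its tenant's prefix.
SAFE_ID = re.compile(r"^[a-z0-9][a-z0-9-]{0,62}$")


def _check(value: str, what: str) -> str:
    if not SAFE_ID.match(value):
        raise ValueError(f"invalid {what}: {value!r}")
    return value


def song_object_key(tenant_id: str, song_id: str) -> str:
    """S3 key under the tenant's dedicated prefix ("folder")."""
    return f"{_check(tenant_id, 'tenant id')}/songs/{_check(song_id, 'song id')}.mp3"


def song_table_name(tenant_id: str) -> str:
    """Name of the tenant's dedicated DynamoDB metadata table."""
    return f"songs-{_check(tenant_id, 'tenant id')}"
```

Starting every key with the tenant ID also spreads requests across prefixes, which is where the per-prefix S3 scaling mentioned above comes from.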

This architecture gives SaaS providers effective insight into all namespaces and application instances. It enables faster feedback and better communication between customers and SaaS providers, which results in frequent feature releases and a rapid response to the market. Being able to remove features, deploy new ones, and pivot quickly toward where the market is going is a great advantage.

Core SaaS components

From the architecture diagram shown above, we can identify three core components of SaaS architecture: tenant onboarding, the application, and operations. The tenant onboarding component is isolated in its own namespace within the same EKS cluster, with its own resources and infrastructure. It is a public-facing microservice that looks very simple from the outside but can be very complex on the inside, since, as we already saw, a lot happens in the background.

Within the application part, we are dealing with identity, tenant isolation, and data partitioning. How identity is provided and how it flows through our system matters a great deal: after each user logs in, we need to know which tenant that user is bound to, and this can influence the infrastructure part of the story as well.
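One common way to carry the tenant through the system is a claim in the token issued at login; Cognito ID tokens expose custom user-pool attributes as claims prefixed with `custom:`. The claim name `custom:tenant_id` below is a hypothetical choice. This sketch only decodes the payload and deliberately does not verify the signature; a real service must first verify the token (signature against the user pool's JWKS, issuer, audience, expiry) before trusting any claim.

```python
import base64
import json


def unverified_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying its signature (illustration only)."""
    try:
        payload_b64 = token.split(".")[1]
    except IndexError:
        raise ValueError("not a JWT") from None
    # JWTs use unpadded base64url; restore padding before decoding.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def tenant_id_from_token(token: str, claim: str = "custom:tenant_id") -> str:
    """Return the tenant a (previously verified) token is bound to."""
    claims = unverified_claims(token)
    if claim not in claims:
        raise PermissionError("token carries no tenant claim")
    return claims[claim]
```

Resolving the tenant from the token, rather than from a request parameter the client controls, means every downstream service sees the same tenant the identity provider vouched for.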

Tenant isolation is generally achieved with Kubernetes namespaces, but a lot of additional configuration is still needed in AWS IAM, Amazon S3, Amazon DynamoDB, Amazon Cognito, and other services. Data can be partitioned in different ways; in our example we saw a shared S3 bucket and DynamoDB with separate tables and folders for each tenant.
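On the IAM side, the shared bucket and per-tenant tables from the music example can be fenced off with a tenant-scoped policy. This minimal sketch generates one; the bucket name, account ID, region and `songs-<tenant>` table naming are hypothetical, while the actions, ARN formats and the `s3:prefix` condition key are standard IAM. Such a policy could be attached to a role assumed by the tenant namespace's service account, for example through IAM Roles for Service Accounts.

```python
import json


def tenant_policy(tenant_id: str, bucket: str, region: str, account_id: str) -> dict:
    """IAM policy limiting a tenant to its own S3 prefix and DynamoDB table."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/songs-{tenant_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read/write only objects under the tenant's prefix.
                "Sid": "TenantObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{tenant_id}/*",
            },
            {   # Listing is allowed only when restricted to that prefix.
                "Sid": "ListOwnPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{tenant_id}/*"]}},
            },
            {   # Access only to the tenant's dedicated table.
                "Sid": "TenantTable",
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:Query"],
                "Resource": table_arn,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(tenant_policy("acme", "music-bucket", "eu-west-1", "123456789012"), indent=2))
```

Namespaces separate the workloads inside the cluster; a policy like this is what stops a compromised or buggy tenant workload from reaching another tenant's data in AWS services outside it.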

Operations means monitoring the SaaS system as a whole as well as individual tenants, reacting to events, and improving services. Here we can perform various data analytics, management operations, billing, and so on. So we are talking about the business and technical parts at the same time, since we improve both. This is illustrated in the image below.

Pros and cons of using EKS for multi-tenant SaaS systems

What are the advantages and disadvantages of using EKS for building multi-tenant SaaS applications? Kubernetes provides namespaces, which are “isolated” by nature, so part of the isolation work is already done. However, some configuration is still required, depending on how complex the system is and how many resources live outside EKS. Even though namespaces help us isolate compute resources, the isolation is not complete.

On the other hand, EKS is a managed service, so the control plane is not something you need to worry about. EKS also provides high availability and scalability for the control plane, so you as a SaaS provider can focus on worker nodes and other parts of the infrastructure. EKS integrates well with Elastic Load Balancing, which helps automate load balancing.

One downside is that an outage affects all tenants at once. If the infrastructure is almost the same for every tenant, this approach can be a very good option for building a multi-tenant SaaS system. However, if infrastructure and features differ from tenant to tenant, it would be better to choose some form of silo model, which allows much more customization.
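One concrete reason namespace isolation is incomplete is networking: by default, Pods in one namespace can reach Pods in any other. A common mitigation is a NetworkPolicy that only admits traffic from the same namespace. This minimal sketch generates one; the policy name is hypothetical, and enforcement requires a network policy implementation in the cluster (such as the Amazon VPC CNI's network policy support or Calico). Traffic from a load balancer using IP targets does not originate from a Pod, so its source range (for example the VPC CIDR) has to be allowed explicitly through `allow_cidrs`.

```python
def same_namespace_only(namespace: str, allow_cidrs: tuple[str, ...] = ()) -> dict:
    """NetworkPolicy admitting ingress only from Pods in the same namespace,
    plus any explicitly allowed CIDR ranges (e.g. the load balancer's)."""
    sources = [{"podSelector": {}}]  # any Pod in this same namespace
    sources += [{"ipBlock": {"cidr": c}} for c in allow_cidrs]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "same-namespace-only", "namespace": namespace},
        "spec": {
            "podSelector": {},          # applies to every Pod in the namespace
            "policyTypes": ["Ingress"],
            "ingress": [{"from": sources}],
        },
    }
```

Even with such policies in place, tenants still share nodes, the kernel and the control plane, which is exactly the shared blast radius described above.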

Conclusion

This blog post took a deeper look at implementing multi-tenant SaaS applications using Amazon EKS with the help of other AWS services. It should now be clear how the business strategy and the technology of SaaS solutions combine and complement each other. Customer satisfaction is the number one priority for SaaS providers, and EKS allows them to roll out not only new tenants but also new features very rapidly. With EKS we can logically isolate tenants on shared underlying infrastructure, although, as we saw, this approach has both advantages and drawbacks. We also saw how other components of the SaaS system help us welcome new customers and monitor their resource usage, so that we can improve communication and pivot based on customer feedback. Finally, keep in mind that this is an example meant to teach the most important concepts of multi-tenant SaaS systems. Thanks for reading, and I hope you enjoyed it!
